How Essential Is DeepSeek AI? 10 Professional Quotes

Laurene38L1834178551 2025.03.21 11:27 Views: 2

Each of these moves is broadly consistent with the three key strategic rationales behind the October 2022 controls and their October 2023 update, which aim to: (1) choke off China’s access to the future of AI and high performance computing (HPC) by limiting China’s access to advanced AI chips; (2) prevent China from obtaining or domestically producing alternatives; and (3) mitigate the revenue and profitability impacts on U.S. While US companies, including OpenAI, have been focused on increasing computing power to deliver more sophisticated models, China’s AI ecosystem has taken a different route, prioritizing efficiency and innovation despite hardware limitations. The DeepSeek controversy highlights key challenges in AI development, including ethical concerns over data usage, intellectual property rights, and international competition. But, like many models, it faced challenges in computational efficiency and scalability. This means they successfully overcame the previous challenges in computational efficiency! GPUs are a means to an end, tied to specific architectures that are in vogue right now. Now to another DeepSeek giant, DeepSeek-Coder-V2!

This time the developers upgraded the previous version of their Coder, and DeepSeek-Coder-V2 now supports 338 languages and a 128K context length. DeepSeekMoE is implemented in the most powerful DeepSeek models: DeepSeek V2 and DeepSeek-Coder-V2. MoE in DeepSeek-V2 works like DeepSeekMoE, which we’ve explored earlier. Transformer architecture: At its core, DeepSeek-V2 uses the Transformer architecture, which processes text by splitting it into smaller tokens (like words or subwords) and then uses layers of computations to understand the relationships between these tokens. Initially, DeepSeek created their first model with an architecture similar to other open models like LLaMA, aiming to outperform benchmarks. Before becoming a team of five, the first public demonstration occurred at The International 2017, the annual premier championship tournament for the game, where Dendi, a professional Ukrainian player, lost against a bot in a live one-on-one matchup. One of the reasons DeepSeek is making headlines is that its development occurred despite U.S. This is exemplified in their DeepSeek-V2 and DeepSeek-Coder-V2 models, with the latter widely regarded as one of the strongest open-source code models available. However, small context and poor code generation remain roadblocks, and I haven’t yet made this work well.
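The Transformer description above (split text into tokens, then compute relationships between them) can be illustrated with a toy sketch. Everything here is an assumption for illustration: the whitespace tokenizer, the `embed` function, and the identity projections stand in for the learned subword vocabulary and learned weights that a real model such as DeepSeek-V2 would use.

```python
import math

def tokenize(text):
    # Toy whitespace tokenizer (assumption); real models use learned subword vocabularies.
    return text.lower().split()

def embed(token, dim=4):
    # Hypothetical deterministic embedding built from character codes.
    seed = sum(ord(c) for c in token)
    return [math.sin((i + 1) * seed) for i in range(dim)]

def softmax(xs):
    # Numerically stable softmax.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    # Scaled dot-product self-attention with identity query/key/value projections.
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in vectors]
        weights = softmax(scores)  # how strongly this token attends to each token
        all_weights.append(weights)
        outputs.append([
            sum(weights[j] * vectors[j][d] for j in range(len(vectors)))
            for d in range(dim)
        ])
    return outputs, all_weights

tokens = tokenize("DeepSeek-V2 uses the Transformer architecture")
vectors = [embed(t) for t in tokens]
outputs, weights = self_attention(vectors)
```

Each row of `weights` sums to 1 and describes how one token distributes its attention over all tokens in the input; stacking many such layers with learned projections is what the paragraph calls "layers of computations".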

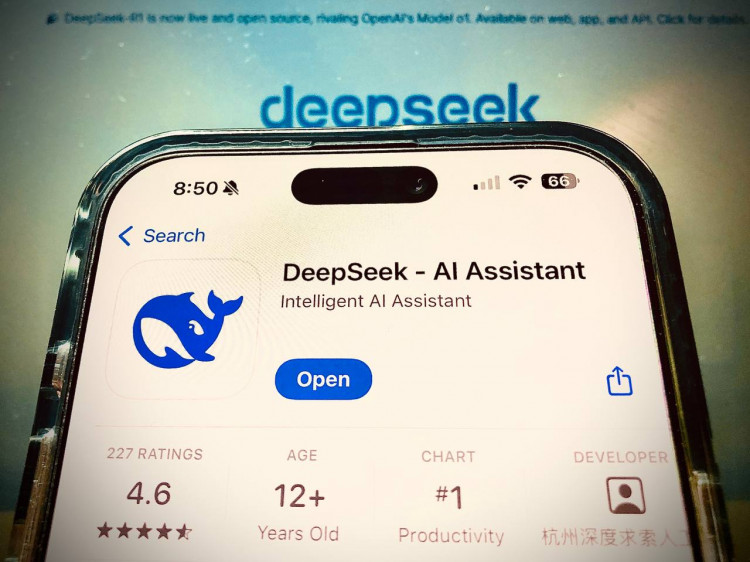

This particular model has a low quantization quality, so despite its coding specialization, the quality of the generated VHDL and SystemVerilog code is fairly poor. 1,170B code tokens were taken from GitHub and CommonCrawl. Managing extremely long text inputs of up to 128,000 tokens. Go to Toolbox on the home screen and select AI Text to Video from the list of Filmora’s tools. Artificial intelligence has revolutionized communication, offering users tools capable of dynamic, meaningful interactions. They gave users access to a smaller version of the latest model, o3-mini, last week. That allows apps that gain installs quickly to skyrocket to the top of the charts, overtaking others that may have a larger total number of users or installs. The $5.6 million figure only included actually training the chatbot, not the costs of earlier-stage research and experiments, the paper said. DeepSeek's pricing is significantly lower across the board, with input and output costs a fraction of what OpenAI charges for GPT-4o. But what truly propelled DeepSeek's popularity is the fact that it's open source, as well as its pricing.

DeepSeek models quickly gained popularity upon release. Reasoning models are relatively new, and use a technique called reinforcement learning, which essentially pushes an LLM to go down a chain of thought, then reverse if it runs into a "wall," before exploring various alternative approaches and arriving at a final answer. Their innovative approaches to attention mechanisms and the Mixture-of-Experts (MoE) technique have led to impressive efficiency gains. This led the DeepSeek AI team to innovate further and develop their own approaches to solve these existing problems. DeepSeek took down the dataset "in less than an hour" after becoming aware of the breach, according to Ami Luttwak, Wiz’s chief technology officer. Fine-grained expert segmentation: DeepSeekMoE breaks down each expert into smaller, more focused parts. Another key trick in its toolkit is Multi-Token Prediction, which predicts multiple parts of a sentence or problem simultaneously, speeding things up considerably. However, such a complex large model with many moving parts still has several limitations. This allows the model to process data faster and with less memory without losing accuracy. The router is a mechanism that decides which expert (or experts) should handle a specific piece of data or task.
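The router idea described above can be sketched with generic top-k gating. This is a minimal illustration, not DeepSeekMoE itself: the function names, the router weights, and the four scaling "experts" are all hypothetical, and DeepSeekMoE's fine-grained expert segmentation is not modeled.

```python
import math

def softmax(xs):
    # Numerically stable softmax.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route(token_vec, router_weights, top_k=2):
    # One score per expert: dot product of the token with that expert's router row.
    scores = [sum(w * x for w, x in zip(row, token_vec)) for row in router_weights]
    probs = softmax(scores)
    # Keep only the top_k experts and renormalize their gate values to sum to 1.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(token_vec, router_weights, experts, top_k=2):
    # Run only the selected experts and mix their outputs by gate value.
    chosen = route(token_vec, router_weights, top_k)
    out = [0.0] * len(token_vec)
    for idx, gate in chosen:
        expert_out = experts[idx](token_vec)
        out = [o + gate * v for o, v in zip(out, expert_out)]
    return out, chosen

# Hypothetical experts: each simply scales its input by a different factor.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
# Hypothetical router weights, one row per expert.
router_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]

output, chosen = moe_forward([2.0, 1.0], router_weights, experts)
```

For the token `[2.0, 1.0]`, the router scores are 2, 1, 3, and -3, so experts 2 and 0 are selected and the other two are never evaluated. Skipping the unselected experts is where the compute savings of an MoE layer come from.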

Copyright © youlimart.com All Rights Reserved.鲁ICP备18045292号-2 鲁公网安备 37021402000770号