网站公告

more- Eight Steps ... 25-03-23 21:28

- Exactly How ... 25-03-23 15:40

- Just How To ... 25-03-23 15:39

- How To Regis... 25-03-23 15:30

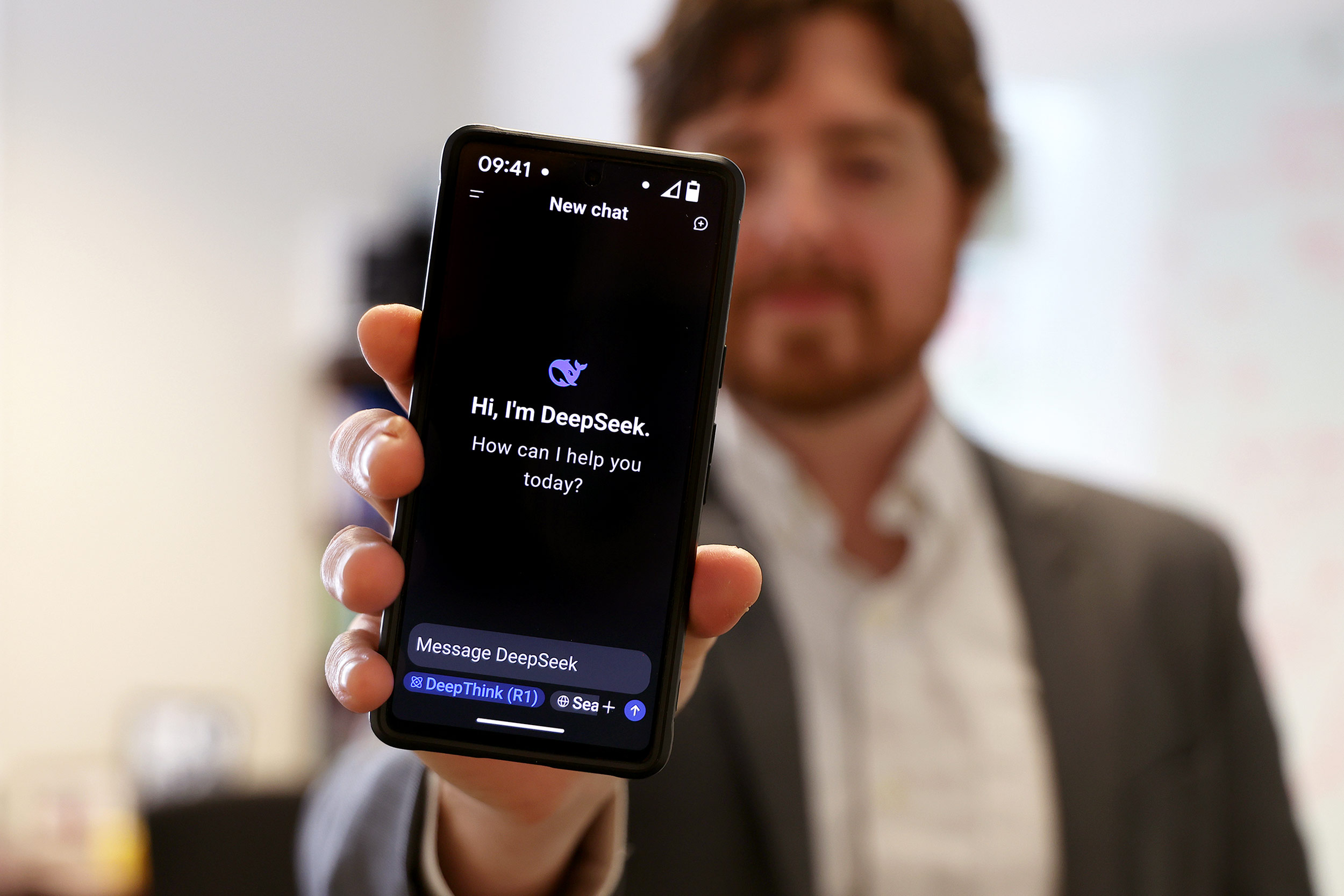

Three Free Ways to Get More With DeepSeek

ChristinaVarela7164 2025.03.21 21:57 Views: 2

HuggingFace reported that DeepSeek models have more than 5 million downloads on the platform. In a joint submission with CoreWeave and NVIDIA, the cluster completed the reference training task for large language models in just eleven minutes, solidifying its position as the fastest cluster on this benchmark. On FRAMES, a benchmark requiring question answering over 100k-token contexts, DeepSeek-V3 closely trails GPT-4o while outperforming all other models by a significant margin. GPT-3 didn't support long context windows, but if for the moment we assume it did, then each additional token generated at a 100K context length would require 470 GB of memory reads, or around 140 ms of H100 time given the H100's HBM bandwidth of 3.3 TB/s. This rough calculation shows why it's crucial to find ways to reduce the size of the KV cache when working with context lengths of 100K or above. DeepSeek-R1 exhibits strong performance in mathematical reasoning tasks. Due to the poor performance at longer token lengths, we produced a new version of the dataset for each token length, in which we kept only the functions with a token length of at least half the target number of tokens.
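The 470 GB and 140 ms figures above can be reproduced with a short back-of-the-envelope calculation. The sketch below assumes GPT-3's published shape (96 layers, 96 attention heads, head dimension 128) and 2 bytes per cached value; these parameters are not stated in the text and are assumptions made here for illustration.

```python
# Rough KV-cache estimate for a GPT-3-sized model at a 100K context.
# Assumed (not given in the text): 96 layers, 96 heads, head_dim 128, 2-byte values.

def kv_cache_bytes_per_token(n_layers, n_heads, head_dim, bytes_per_value=2):
    # Each layer stores one key vector and one value vector per head per token.
    return 2 * n_layers * n_heads * head_dim * bytes_per_value

per_token = kv_cache_bytes_per_token(n_layers=96, n_heads=96, head_dim=128)
context_len = 100_000

# Generating one new token requires reading the whole cache once.
total_bytes = per_token * context_len

hbm_bandwidth = 3.3e12  # bytes per second, the H100 figure quoted in the text
read_time_ms = total_bytes / hbm_bandwidth * 1e3

print(f"KV cache per token: {per_token / 1e6:.2f} MB")
print(f"KV cache at 100K context: {total_bytes / 1e9:.0f} GB")
print(f"Read time per generated token: {read_time_ms:.0f} ms")
```

Under these assumptions the cache is roughly 4.7 MB per token, which is why the cost scales so quickly with context length.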

According to data from Exploding Topics, interest in the Chinese AI company has increased by 99x in just the last three months following the release of its latest model and chatbot app. The U.S. Navy banned its personnel from using DeepSeek's applications due to security and ethical concerns and uncertainties. Impressively, they've achieved this SOTA performance using only 2.8 million H800 hours of training hardware time, equivalent to about 4e24 FLOP if we assume 40% MFU. Comprehensive evaluations reveal that DeepSeek-V3 has emerged as the strongest open-source model currently available, and achieves performance comparable to leading closed-source models like GPT-4o and Claude-3.5-Sonnet. Performance benchmarks of DeepSeek-R1 and OpenAI-o1 models. Feedback from users helps improve its performance and accuracy. While OpenAI's o1 maintains a slight edge in coding and factual reasoning tasks, DeepSeek-R1's open-source access and low costs are appealing to users. The other noticeable difference is the pricing for each model. DeepSeek's pricing is significantly lower across the board, with input and output costs a fraction of what OpenAI charges for GPT-4o. This figure is significantly lower than the hundreds of millions (or billions) American tech giants spent developing other LLMs. Some of the most common LLMs are OpenAI's GPT-3, Anthropic's Claude, Google's Gemini, and a developer favorite, Meta's open-source Llama.
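The conversion from 2.8 million H800 hours to about 4e24 FLOP can be checked with simple arithmetic. The sketch below assumes a peak dense BF16 throughput of roughly 989 TFLOP/s per H800; that peak figure is an assumption introduced here, not a number from the text, while the GPU hours and the 40% MFU come from the paragraph above.

```python
# Rough training-compute estimate from GPU hours and model FLOP utilization (MFU).
gpu_hours = 2.8e6        # H800 hours, from the text
mfu = 0.40               # assumed utilization, from the text
peak_flops = 989e12      # assumed peak dense BF16 FLOP/s per H800 (not from the text)

gpu_seconds = gpu_hours * 3600
training_flop = gpu_seconds * peak_flops * mfu

print(f"Estimated training compute: {training_flop:.2e} FLOP")
```

A different assumed peak throughput or MFU shifts the result proportionally, so the estimate is only good to about one significant figure.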

This is because cache reads are not free: we need to save all those vectors in GPU high-bandwidth memory (HBM) and then load them into the tensor cores when we need to involve them in a computation. A: They didn't. They just tinkered with their chips to make sure they handled memory as efficiently as possible. We allow it to search Semantic Scholar to make sure its idea is novel. 9.2 In the event of a dispute arising from the signing, performance, or interpretation of these Terms, the Parties shall make efforts to resolve it amicably through negotiation. DeepSeek-V3 demonstrates competitive performance, standing on par with top-tier models such as LLaMA-3.1-405B, GPT-4o, and Claude-Sonnet 3.5, while significantly outperforming Qwen2.5 72B. Moreover, DeepSeek-V3 excels in MMLU-Pro, a more challenging educational knowledge benchmark, where it closely trails Claude-Sonnet 3.5. On MMLU-Redux, a refined version of MMLU with corrected labels, DeepSeek-V3 surpasses its peers. The model employs reinforcement learning to train MoE with smaller-scale models.

DeepSeek-Prover-V1.5 is a system that combines reinforcement learning and Monte-Carlo Tree Search to harness the feedback from proof assistants for improved theorem proving. This series includes large language models, multimodal models, mathematical models, and code models, over 100 versions in total. DeepSeek-V3 marked a major milestone with 671 billion total parameters and 37 billion active. DeepSeek-Coder-V2 expanded the capabilities of the original coding model. It featured 236 billion parameters, a 128,000-token context window, and support for 338 programming languages, to handle more complex coding tasks. DeepSeek-R1 is the company's latest model, focusing on advanced reasoning capabilities. On Codeforces, OpenAI o1-1217 leads with 96.6%, while DeepSeek-R1 achieves 96.3%. This benchmark evaluates coding and algorithmic reasoning capabilities. For SWE-bench Verified, DeepSeek-R1 scores 49.2%, slightly ahead of OpenAI o1-1217's 48.9%. This benchmark focuses on software engineering tasks and verification. On AIME 2024, it scores 79.8%, slightly above OpenAI o1-1217's 79.2%. This evaluates advanced multistep mathematical reasoning. On GPQA Diamond, OpenAI o1-1217 leads with 75.7%, while DeepSeek-R1 scores 71.5%. This measures the model's ability to answer general-purpose knowledge questions. Optional: Microphone to ask questions. The fact that this works at all is surprising and raises questions about the importance of position information across long sequences.

If you have any questions about where and how to use DeepSeek, you can contact us through our website.

Copyright © youlimart.com All Rights Reserved.鲁ICP备18045292号-2 鲁公网安备 37021402000770号