The Untold Secret to Mastering DeepSeek in Just Ten Days

ErnieBadilla0137394 2025.03.23 11:14 Views: 2

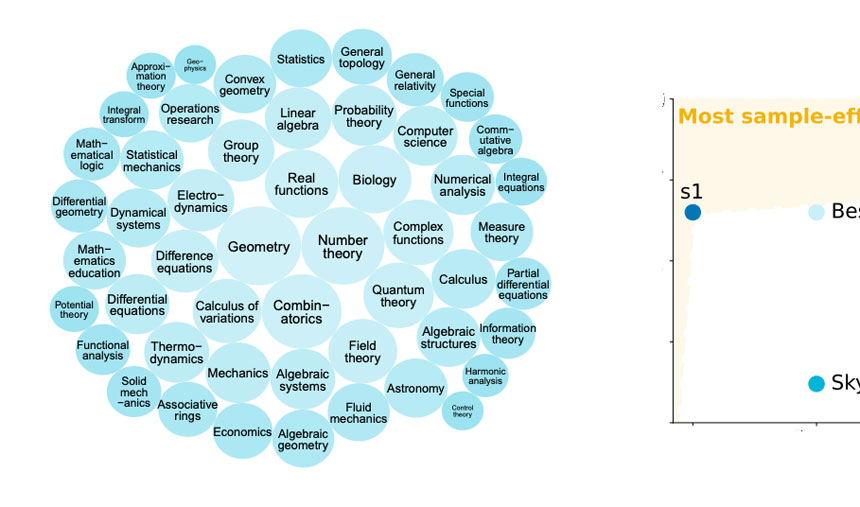

As shown in the diagram above, the DeepSeek team used DeepSeek-R1-Zero to generate what they call "cold-start" SFT data. In this phase, the most recent model checkpoint was used to generate 600K Chain-of-Thought (CoT) SFT examples, while an additional 200K knowledge-based SFT examples were created using the DeepSeek-V3 base model.

1. Inference-time scaling, a technique that improves reasoning capabilities without training or otherwise modifying the underlying model. However, this technique is often implemented at the application layer on top of the LLM, so it is possible that DeepSeek applies it within their app.

The DeepSeek Chat V3 model has a high score on aider's code editing benchmark.

The first model, DeepSeek-R1-Zero, was built on top of the DeepSeek-V3 base model, a standard pre-trained LLM released in December 2024. Unlike typical RL pipelines, where supervised fine-tuning (SFT) is applied before RL, DeepSeek-R1-Zero was trained exclusively with reinforcement learning, without an initial SFT stage, as highlighted in the diagram below.

In fact, the SFT data used for this distillation process is the same dataset that was used to train DeepSeek-R1, as described in the previous section. The same can be said about the proliferation of different open-source LLMs, like Smaug and DeepSeek, and open-source vector databases, like Weaviate and Qdrant.

This RL stage retained the same accuracy and format rewards used in DeepSeek-R1-Zero's RL process, so the RL has verifiable rewards in addition to human preference-based rewards. In this stage, they again used rule-based methods for accuracy rewards on math and coding questions, while human preference labels were used for other question types. The accuracy reward uses the LeetCode compiler to verify coding answers and a deterministic system to evaluate mathematical responses. For rewards, instead of using a reward model trained on human preferences, they employed two types of rewards: an accuracy reward and a format reward.

[...]" moment, where the model began generating reasoning traces as part of its responses despite not being explicitly trained to do so, as shown in the figure below.
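The two rule-based rewards described above can be illustrated with a minimal sketch. This is not DeepSeek's implementation: the `<think>...</think>` response layout, the function names, and the numeric tolerance are all assumptions made for illustration, and only the deterministic math check is shown (verifying code with a compiler is omitted).

```python
import re
from typing import Optional, Tuple

# Assumed response layout (hypothetical): reasoning inside <think>...</think>,
# followed by the final answer as plain text.
RESPONSE_PATTERN = re.compile(r"^\s*<think>(.+?)</think>\s*(.+?)\s*$", re.DOTALL)


def parse_response(response: str) -> Optional[Tuple[str, str]]:
    """Split a response into (reasoning, answer), or None if malformed."""
    match = RESPONSE_PATTERN.match(response)
    if match is None:
        return None
    reasoning, answer = match.group(1).strip(), match.group(2).strip()
    if not reasoning or not answer:
        return None
    return reasoning, answer


def format_reward(response: str) -> float:
    """1.0 if the response follows the assumed layout, else 0.0."""
    return 1.0 if parse_response(response) is not None else 0.0


def accuracy_reward(response: str, reference: str) -> float:
    """Deterministic check of a math-style answer against a reference."""
    parsed = parse_response(response)
    if parsed is None:
        return 0.0
    answer = parsed[1]
    try:
        # Compare numerically when both sides parse as numbers.
        return 1.0 if abs(float(answer) - float(reference)) < 1e-9 else 0.0
    except ValueError:
        # Otherwise fall back to an exact string comparison.
        return 1.0 if answer == reference.strip() else 0.0


def total_reward(response: str, reference: str) -> float:
    """Sum of the two rule-based rewards (equal weighting is an assumption)."""
    return accuracy_reward(response, reference) + format_reward(response)
```

With this sketch, `total_reward("<think>2 + 2 = 4</think> 4", "4")` returns `2.0`, while a bare `"4"` earns `0.0` because it fails the format check and no answer can be extracted. Neither reward needs a learned reward model, which is the property the paragraph above highlights.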

While R1-Zero is not a top-performing reasoning model, it does demonstrate reasoning capabilities by generating intermediate "thinking" steps, as shown in the figure above. The aforementioned CoT approach can be seen as inference-time scaling because it makes inference more expensive by generating more output tokens.

All in all, this is very similar to regular RLHF, except that the SFT data contains (more) CoT examples. Still, this RL process is similar to the commonly used RLHF approach, which is typically applied to preference-tune LLMs. Note that it is actually common to include an SFT stage before RL, as seen in the standard RLHF pipeline. Using this cold-start SFT data, DeepSeek then trained the model via instruction fine-tuning, followed by another reinforcement learning (RL) stage.

3. Supervised fine-tuning (SFT) plus RL, which led to DeepSeek-R1, DeepSeek's flagship reasoning model.

These distilled models serve as an interesting benchmark, showing how far pure supervised fine-tuning (SFT) can take a model without reinforcement learning. This confirms that it is possible to develop a reasoning model using pure RL, and the DeepSeek team was the first to demonstrate (or at least publish) this approach.

OpenSourceWeek: DeepEP. Excited to introduce DeepEP, the first open-source EP communication library for MoE model training and inference.

That paper was about another DeepSeek AI model called R1 that showed advanced "reasoning" skills, such as the ability to rethink its approach to a math problem, and was significantly cheaper than a similar model sold by OpenAI called o1. This means they are cheaper to run, but they can also run on lower-end hardware, which makes them especially interesting for many researchers and tinkerers like me.

Lightspeed Venture Partners venture capitalist Jeremy Liew summed up the potential problem in an X post, referencing new, cheaper AI training models such as China's DeepSeek: "If the training costs for the new DeepSeek models are even close to correct, it feels like Stargate might be getting ready to fight the last war."

Next, let's look at the development of DeepSeek-R1, DeepSeek's flagship reasoning model, which serves as a blueprint for building reasoning models. Not only does the country have access to DeepSeek, but I believe that DeepSeek's success relative to America's leading AI labs will lead to a further unleashing of Chinese innovation as they realize they can compete. DeepSeek's IP investigation services help clients uncover IP leaks, swiftly identify their source, and mitigate damage. You can also confidently drive generative AI innovation by building on AWS services that are uniquely designed for security.
