By No Means Lose Your DeepSeek ChatGPT Again

FIECelinda916740 2025.03.23 11:17

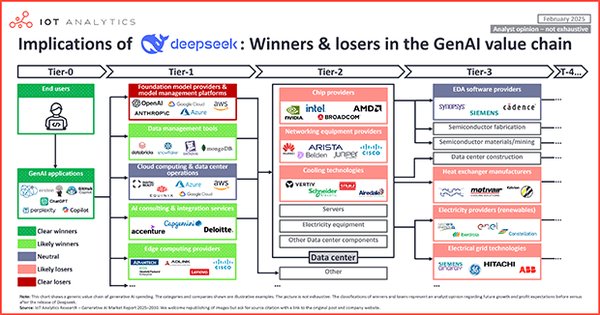

NVLink offers a bandwidth of 160 GB/s, roughly 3.2 times that of IB (50 GB/s). Youper offers a mental health-focused AI chatbot, which converses with users about their emotional struggles and provides personalized advice and coping techniques. Clearly, users have noticed DeepSeek R1's prowess. While DeepSeek limited registrations, existing users were still able to log on as usual. There is still a lot we don't know. In addition, even in more general scenarios without a heavy communication burden, DualPipe still exhibits efficiency advantages. With this overlapping strategy, we can ensure that both all-to-all and PP communication are fully hidden during execution. The status of OpenAI, and of other US companies, as the world leaders in AI has been dramatically undermined this week by the sudden emergence of DeepSeek, a Chinese app that can emulate the performance of ChatGPT, apparently at a fraction of the cost. The bottom line is that DeepSeek's emergence is a turning point in the AI race, driving significant market shifts. Nvidia shares tumbled 17% on Monday, the biggest drop since March 2020, erasing $589 billion from the company's market capitalization. DeepSeek-V3 is trained on a cluster equipped with 2048 NVIDIA H800 GPUs.
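To make the bandwidth gap concrete, here is a minimal sketch that converts the quoted figures (160 GB/s for NVLink, 50 GB/s for IB) into idealized transfer times. The 1 GB payload is a hypothetical value, and the model deliberately ignores latency and protocol overhead, so treat the numbers as a back-of-the-envelope comparison rather than a measurement.

```python
# Back-of-the-envelope comparison of NVLink and InfiniBand (IB) transfer times,
# using only the bandwidth figures quoted in the text. Latency and protocol
# overhead are ignored; the payload size below is hypothetical.

NVLINK_GBPS = 160.0  # NVLink bandwidth in GB/s (from the text)
IB_GBPS = 50.0       # InfiniBand bandwidth in GB/s (from the text)


def transfer_time_ms(payload_gb: float, bandwidth_gbps: float) -> float:
    """Idealized transfer time in milliseconds: size divided by bandwidth."""
    return payload_gb / bandwidth_gbps * 1000.0


if __name__ == "__main__":
    payload_gb = 1.0  # hypothetical 1 GB of activations to move
    t_nvlink = transfer_time_ms(payload_gb, NVLINK_GBPS)
    t_ib = transfer_time_ms(payload_gb, IB_GBPS)
    print(f"NVLink: {t_nvlink:.2f} ms, IB: {t_ib:.2f} ms, "
          f"IB/NVLink: {t_ib / t_nvlink:.1f}x")
```

Under these assumptions the same payload takes about 3.2 times longer over IB than over NVLink, which is why cross-node (IB) traffic is the scarcer resource worth limiting.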

DeepSeek claimed the model training took 2,788 thousand H800 GPU hours, which, at a price of $2 per GPU hour, comes out to a mere $5.576 million. Each node in the H800 cluster contains eight GPUs connected by NVLink and NVSwitch within the node. … affinity scores of the experts distributed on each node. Looking at the AUC values, we see that for all token lengths, the Binoculars scores are almost on par with random chance in terms of being able to differentiate between human-written and AI-written code. To effectively leverage the different bandwidths of IB and NVLink, we limit each token to be dispatched to at most four nodes, thereby reducing IB traffic. Across different nodes, InfiniBand (IB) interconnects are used to facilitate communication. Given the efficient overlapping strategy, the full DualPipe scheduling is illustrated in Figure 5. It employs bidirectional pipeline scheduling, which feeds micro-batches from both ends of the pipeline simultaneously, so that a significant portion of communication can be fully overlapped. To be specific, in our cluster, cross-node GPUs are fully interconnected with IB, and intra-node communication is handled via NVLink.
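The node limit described above (each token dispatched to at most four nodes, chosen using the affinity scores of the experts on each node) can be sketched as a two-stage top-k selection. This is a minimal illustration, not DeepSeek's implementation: the function name `node_limited_topk` is hypothetical, and the node-ranking rule (sum of each node's `top_k // max_nodes` strongest expert scores) is an assumption chosen for clarity.

```python
from typing import List


def node_limited_topk(
    scores: List[float],
    experts_per_node: int,
    top_k: int,
    max_nodes: int = 4,
) -> List[int]:
    """Pick top_k experts for one token while touching at most max_nodes nodes.

    Hypothetical sketch. Assumed ranking rule: a node's score is the sum of its
    (top_k // max_nodes) highest expert affinity scores. Experts are laid out
    contiguously, so expert e lives on node e // experts_per_node.
    """
    num_nodes = len(scores) // experts_per_node
    per_node = max(1, top_k // max_nodes)

    # Stage 1: rank nodes by the sum of their strongest expert affinities.
    node_scores = []
    for node in range(num_nodes):
        start = node * experts_per_node
        local = sorted(scores[start:start + experts_per_node], reverse=True)
        node_scores.append((sum(local[:per_node]), node))
    chosen_nodes = {node for _, node in sorted(node_scores, reverse=True)[:max_nodes]}

    # Stage 2: ordinary top-k, restricted to experts on the chosen nodes.
    eligible = [
        (score, expert)
        for expert, score in enumerate(scores)
        if expert // experts_per_node in chosen_nodes
    ]
    return sorted(expert for _, expert in sorted(eligible, reverse=True)[:top_k])


if __name__ == "__main__":
    # 8 nodes x 2 experts. A plain top-4 would pick experts 0, 2, 4, 6 and
    # touch four nodes; with max_nodes=2 the selection stays on nodes 0 and 1.
    scores = [0.9, 0.8, 0.85, 0.1, 0.84, 0.1, 0.83, 0.1] + [0.0] * 8
    print(node_limited_topk(scores, experts_per_node=2, top_k=4, max_nodes=2))
```

The trade-off is that a token may occasionally skip a slightly higher-scoring expert on a third or fourth node; in exchange, its dispatch fans out over fewer IB links.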

Secondly, we develop efficient cross-node all-to-all communication kernels to fully utilize IB and NVLink bandwidths and conserve the Streaming Multiprocessors (SMs) dedicated to communication. The implementation of the kernels is co-designed with the MoE gating algorithm and the network topology of our cluster. In order to ensure sufficient computational performance for DualPipe, we customize efficient cross-node all-to-all communication kernels (including dispatching and combining) to conserve the number of SMs dedicated to communication. The following table highlights the capabilities of DeepSeek-V3 against previous versions and other leading AI models across multiple categories, including English proficiency, coding, mathematics, and Chinese language understanding. Therefore, DeepSeek-V3 does not drop any tokens during training. Our principle of maintaining the causal chain of predictions is similar to that of EAGLE (Li et al., 2024b), but its primary objective is speculative decoding (Xia et al., 2023; Leviathan et al., 2023), whereas we use MTP to improve training. On the one hand, an MTP objective densifies the training signals and may improve data efficiency. … 2024), we investigate and set a Multi-Token Prediction (MTP) objective for DeepSeek-V3, which extends the prediction scope to multiple future tokens at each position.
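The claim that an MTP objective "densifies the training signals" can be seen by counting prediction targets. The sketch below builds, for every position, the tokens at offsets 1 through `depth`; offset 1 is the ordinary next-token target and the rest are the extra future tokens. The helper names `mtp_targets` and `count_targets` are hypothetical, and this only illustrates target construction, not how DeepSeek-V3's MTP modules compute their losses.

```python
from typing import List, Optional


def mtp_targets(tokens: List[int], depth: int) -> List[List[Optional[int]]]:
    """For each position i, list the tokens at offsets 1..depth (None past the end).

    Offset 1 is the usual next-token target; offsets 2..depth are the extra
    future tokens a multi-token prediction objective supervises at position i.
    """
    n = len(tokens)
    return [
        [tokens[i + k] if i + k < n else None for k in range(1, depth + 1)]
        for i in range(n)
    ]


def count_targets(targets: List[List[Optional[int]]]) -> int:
    """Number of (position, offset) pairs that actually have a target token."""
    return sum(t is not None for row in targets for t in row)


if __name__ == "__main__":
    seq = [10, 11, 12, 13, 14]
    print(mtp_targets(seq, depth=2))
    # Next-token prediction alone yields 4 targets; depth 2 yields 7.
    print(count_targets(mtp_targets(seq, 1)), count_targets(mtp_targets(seq, 2)))
```

Every extra offset adds supervision drawn from the same sequence, which is one way to read "improve data efficiency": more training signal per token of data, at the cost of extra prediction heads during training.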

For DeepSeek-V3, the communication overhead introduced by cross-node expert parallelism results in an inefficient computation-to-communication ratio of approximately 1:1. To tackle this challenge, we design an innovative pipeline parallelism algorithm called DualPipe, which not only accelerates model training by effectively overlapping forward and backward computation-communication phases, but also reduces pipeline bubbles. In order to facilitate efficient training of DeepSeek-V3, we implement meticulous engineering optimizations. The training of DeepSeek-V3 is supported by the HAI-LLM framework, an efficient and lightweight training framework crafted by our engineers from the ground up. Owing to its effective load-balancing strategy, DeepSeek-V3 maintains good load balance throughout its full training. Under this constraint, our MoE training framework can nearly achieve full computation-communication overlap. Our MTP strategy mainly aims to improve the performance of the main model, so during inference we can directly discard the MTP modules and the main model can function independently and normally. Additionally, we can also repurpose these MTP modules for speculative decoding to further reduce generation latency.
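Why a roughly 1:1 computation-to-communication ratio makes overlap so valuable can be shown with a toy two-stage pipeline: each chunk first computes, then communicates, and chunk i's communication may run while chunk i+1 computes. This is a simplified timing model chosen for illustration, not DualPipe itself (which schedules forward and backward chunks bidirectionally); the function names and the unit costs are hypothetical.

```python
def serial_time(num_chunks: int, compute: float, comm: float) -> float:
    """No overlap: every chunk computes, then communicates, back to back."""
    return num_chunks * (compute + comm)


def overlapped_time(num_chunks: int, compute: float, comm: float) -> float:
    """Two-stage pipeline: chunk i communicates while chunk i+1 computes.

    Only the first chunk's compute and the last chunk's communication stay
    exposed; each step in between costs max(compute, comm).
    """
    if num_chunks == 0:
        return 0.0
    return compute + (num_chunks - 1) * max(compute, comm) + comm


if __name__ == "__main__":
    # Hypothetical unit costs with the ~1:1 ratio mentioned in the text.
    n, c, m = 10, 1.0, 1.0
    print(serial_time(n, c, m), overlapped_time(n, c, m))
```

With equal compute and communication costs, the serial schedule spends half its time waiting on the network, while the overlapped schedule approaches half the serial time as the number of chunks grows. When one side dominates, overlap can only hide the smaller of the two costs, which is why a balanced 1:1 ratio is both the worst case without overlap and the best case for it.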

Copyright © youlimart.com All Rights Reserved.鲁ICP备18045292号-2 鲁公网安备 37021402000770号